The Unicode Sandwich

Best Practice for handling Strings and Characters in Python

You might think that understanding Unicode is not important, but there is no escaping the str versus bytes divide.

Today we will talk about the mundane but still relevant topics of Unicode and of encoding and decoding text.

What makes Unicode so vital?

Unicode provides multiple ways of representing certain characters, so normalizing text is a prerequisite for reliably comparing or searching it. We'll begin by looking at common issues with characters and then cover some essentials of working with bytes in Python.

Common Character Issues

What is a Character?

There are multiple answers to this question:

A character is a single visual object used to represent text.

A character is a single unit of information.

A Unicode character.

As of 2021, the best definition we have is the Unicode character, as defined by the Unicode Standard: the international character encoding standard that makes almost all characters accessible across platforms, programs, and devices.

What is The Identity of a Character?

The identity of a character is its code point: a number from 0 to 1,114,111 (base 10), shown in the Unicode Standard as 4 to 6 hex digits with a “U+” prefix. For example, the letter A is U+0041.
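In Python, the built-in ord() and chr() functions convert between a character and its code point. The sketch below uses a small hypothetical helper, u_plus, to print a code point in the U+ notation described above.

```python
def u_plus(char: str) -> str:
    """Hypothetical helper: format one character's code point in U+ notation."""
    # ord() returns the code point as an int; pad to at least 4 uppercase hex digits
    return f"U+{ord(char):04X}"

print(u_plus('A'))           # U+0041
print(u_plus('é'))           # U+00E9
print(chr(0x41))             # A  (chr() maps a code point back to a character)
print(ord(chr(1_114_111)))   # 1114111, the largest valid code point
```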

What is The Byte Representation of a Character?

The actual bytes, or byte representation, of a character are the output of the encoding algorithm used to convert its code point into a byte sequence, so they depend on the encoding. For example, the character A (U+0041) is encoded as the single byte \x41 in UTF-8.
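To see that the byte representation depends on the character as well as the encoding, this sketch encodes a few arbitrarily chosen characters with UTF-8 and prints the bytes and their count. Note that b'A' is simply how Python displays the byte \x41.

```python
# Each character has exactly one code point, but UTF-8 uses 1 to 4 bytes for it
for char in ['A', 'é', '\N{EURO SIGN}', '\N{GRINNING FACE}']:
    encoded = char.encode('utf_8')
    print(f"U+{ord(char):04X}", encoded, len(encoded))

# U+0041 b'A' 1
# U+00E9 b'\xc3\xa9' 2
# U+20AC b'\xe2\x82\xac' 3
# U+1F600 b'\xf0\x9f\x98\x80' 4
```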

What are Encoding and Decoding?

Encoding is the process of converting from Code Points to Bytes.

Decoding is the process of converting from Bytes to Code Points.

```python
s = 'café'
b = s.encode('utf8')          # encode: str -> bytes
print(b)
# b'caf\xc3\xa9'
# The code point for "é" is encoded as two bytes in UTF-8

s_decoded = b.decode('utf8')  # decode: bytes -> str
print(s_decoded)
# café
```

Some Byte Manipulation Essentials

There are two built-in types for binary sequences:

The immutable bytes type.

The mutable bytearray type.

Each item in bytes or bytearray is an integer from 0 to 255:

```python
cafe = bytes('café', encoding='utf_8')
print(cafe)
# b'caf\xc3\xa9'
print(cafe[0])
# 99
cafe_arr = bytearray(cafe)
print(cafe_arr)
# bytearray(b'caf\xc3\xa9')
```
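The difference between the two types shows up as soon as you try to modify them. This sketch reuses the cafe example: slicing a bytes object gives bytes, item assignment on bytes raises TypeError, and a bytearray can be changed in place.

```python
cafe = bytes('café', encoding='utf_8')
cafe_arr = bytearray(cafe)

# Slicing bytes returns bytes, even for a slice of length 1
print(cafe[:1])   # b'c'

# bytes is immutable: item assignment raises TypeError
try:
    cafe[0] = 67
except TypeError as exc:
    print('TypeError:', exc)

# bytearray is mutable: items can be replaced and appended in place
cafe_arr[0] = ord('C')
cafe_arr.extend(b'!')
print(cafe_arr)   # bytearray(b'Caf\xc3\xa9!')
```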

Binary sequences are really sequences of integers, but their literal notation reflects the fact that they often contain ASCII text. Python displays each byte as follows:

Bytes with decimal codes 32 to 126 (from space to tilde): the ASCII character itself is used.

Bytes corresponding to tab, newline, carriage return, and \: the escape sequences \t, \n, \r, and \\ are used.

If both string delimiters ' and " appear in the byte sequence, the whole sequence is delimited by ', and any ' inside is escaped as \'.

For other byte values, a hexadecimal escape sequence is used (for example, \x00 is the null byte).

This is why we see b'caf\xc3\xa9': the first three bytes, b'caf', are in the printable ASCII range, but the last two are not.
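You can check these display rules by building bytes objects from lists of integers and looking at their repr(). The byte values below are arbitrary examples chosen to hit each rule.

```python
# Printable ASCII shows as characters, tab/newline use escapes, others use \x..
print(repr(bytes([99, 97, 102, 9, 10, 0, 255])))
# b'caf\t\n\x00\xff'

# A backslash byte is displayed as \\
print(repr(bytes([92])))
# b'\\'

# With both quote characters present, ' delimits the literal and the inner ' is escaped
print(repr(b'\'"'))
# b'\'"'
```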

One last thing to bring up: except for the str methods that do formatting (format, format_map) and those that depend on Unicode data (such as casefold, isdecimal, isidentifier, isnumeric, isprintable, and encode), bytes and bytearray support every str method.
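A quick sketch of what that means in practice, using an arbitrary sample value: the familiar str methods work on bytes, but their arguments must be bytes too, and the Unicode-aware or formatting methods are simply absent.

```python
data = b'  Hello, World  '

# str-like methods work on bytes and return bytes (arguments must be bytes as well)
print(data.strip().upper())                   # b'HELLO, WORLD'
print(data.strip().split(b', '))              # [b'Hello', b'World']
print(data.strip().startswith(b'Hello'))      # True
print(bytearray(b'abc').replace(b'b', b'B'))  # bytearray(b'aBc')

# Methods tied to Unicode data or to formatting do not exist on bytes
print(hasattr(bytes, 'casefold'), hasattr(bytes, 'format'))  # False False
```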

Encoders and Decoders in Python

The Python distribution bundles more than 100 codecs (encoders/decoders) for text-to-byte conversion and vice versa.

Each codec has a name, like utf_8, and often aliases, such as utf8, utf-8, and U8, which you can use as the encoding argument in functions like open(), str.encode(), and bytes.decode().
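To confirm that the aliases point to the same codec, you can use codecs.lookup() from the standard library. It returns a CodecInfo object whose name attribute is the codec's canonical name, and it raises LookupError for an unknown name (the name 'no-such-codec' below is made up for the demonstration).

```python
import codecs

# Different spellings of the same encoding resolve to one codec
for alias in ['utf_8', 'utf8', 'utf-8', 'U8']:
    print(alias, '->', codecs.lookup(alias).name)
# utf_8 -> utf-8
# utf8 -> utf-8
# utf-8 -> utf-8
# U8 -> utf-8

# An unknown encoding name raises LookupError
try:
    codecs.lookup('no-such-codec')
except LookupError as exc:
    print('LookupError:', exc)
```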

Here’s an example of the same string encoded with different codecs:

```python
# Encode the same sentence, "El Niño", with latin_1, utf_8 and utf_16
for codec in ['latin_1', 'utf_8', 'utf_16']:
    print(codec, 'El Niño'.encode(codec), sep='\t')

# latin_1  b'El Ni\xf1o'
# utf_8    b'El Ni\xc3\xb1o'
# utf_16   b'\xff\xfeE\x00l\x00 \x00N\x00i\x00\xf1\x00o\x00'
# (the utf_16 output starts with a byte order mark; shown for a little-endian machine)
```

You can find the full list of codecs in the Python Codec registry.

Where's the Dirt? Encode and Decode Problems

A UnicodeError exception can appear when we attempt to encode a string or decode a binary sequence using the wrong encoding.

The exception is usually more specific:

UnicodeEncodeError: raised when converting str to binary sequences.

UnicodeDecodeError: raised when reading binary sequences into str.

There is no reliable way to deal with a decoding problem other than finding out the correct encoding of the byte sequence.

Some packages can help, like the chardet Python package, which supports more than 30 encodings, but overall it’s still guesswork.
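If you already have a short list of plausible encodings, a pragmatic fallback is to try them in order and keep the first one that decodes without error. The helper below, decode_with_fallback, is a hypothetical standard-library sketch, not chardet's API. Keep in mind that latin_1 can decode any byte sequence, so it belongs last, and a successful decode does not prove the guess is right.

```python
def decode_with_fallback(data, candidates=('utf_8', 'cp1252', 'latin_1')):
    """Hypothetical helper: return (text, codec) for the first candidate
    codec that decodes `data` without raising UnicodeDecodeError."""
    for codec in candidates:
        try:
            return data.decode(codec), codec
        except UnicodeDecodeError:
            continue  # this codec rejects the bytes; try the next one
    raise ValueError(f'none of {candidates} could decode the data')

print(decode_with_fallback('café'.encode('utf_8')))  # ('café', 'utf_8')
print(decode_with_fallback(b'Montr\xe9al'))          # ('Montréal', 'cp1252')
```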

To cope with these errors, you can pass an error handler such as errors='ignore' or errors='replace' to encode() and decode(); which one is appropriate depends on your application's context.

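```python
city = 'São Paulo'
print(city.encode('utf_8'))
# b'S\xc3\xa3o Paulo'

try:
    print(city.encode('cp437'))  # cp437 has no 'ã'
except UnicodeEncodeError as exc:
    print(exc)
# 'charmap' codec can't encode character '\xe3' in position 1: character maps to <undefined>

print(city.encode('cp437', errors='ignore'))   # drop unencodable characters
# b'So Paulo'
print(city.encode('cp437', errors='replace'))  # substitute '?'
# b'S?o Paulo'

octets = b'Montr\xe9al'
print(octets.decode('cp1252'))
# Montréal
print(octets.decode('koi8_r'))  # wrong codec: no error, but the text is garbled
# MontrИal

try:
    print(octets.decode('utf_8'))
except UnicodeDecodeError as exc:
    print(exc)
# 'utf-8' codec can't decode byte 0xe9 in position 5: invalid continuation byte

print(octets.decode('utf_8', errors='replace'))  # U+FFFD replacement character
# Montr�al
```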
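Besides 'ignore' and 'replace', Python's codecs accept other standard error handlers that keep information instead of discarding it. This sketch shows 'xmlcharrefreplace' (encoding only), which writes an XML character reference, and 'backslashreplace', which writes a Python-style escape and also works when decoding.

```python
city = 'São Paulo'

# Replace unencodable characters with an XML numeric character reference
print(city.encode('cp437', errors='xmlcharrefreplace'))
# b'S&#227;o Paulo'

# Replace unencodable characters with a backslash escape
print(city.encode('cp437', errors='backslashreplace'))
# b'S\\xe3o Paulo'

# backslashreplace on decode keeps the undecodable byte visible in the text
print(b'Montr\xe9al'.decode('utf_8', errors='backslashreplace'))
# Montr\xe9al
```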

Normalizing Unicode as a Solution

String manipulation is complicated by the fact that Unicode has combining characters such as diacritics and other marks that attach to the preceding character.

For example, the word “café” may be composed in two ways:

```python
s1 = 'café'                            # 'é' as one precomposed code point (U+00E9)
s2 = 'cafe\N{COMBINING ACUTE ACCENT}'  # 'e' followed by U+0301
print(s1, s2)
# café café
print(len(s1), len(s2))
# 4 5
print(s1 == s2)
# False
```

The solution is to use the unicodedata.normalize() function.

The first argument to this function is one of four Unicode normalization forms:

Normalization Form C (NFC) composes the code points to produce the shortest equivalent string.

Normalization Form D (NFD) decomposes the code points, expanding composed characters into base characters and separate combining characters.

Normalization Form KC (NFKC) is like NFC, but it first applies compatibility decomposition, replacing compatibility characters (such as ligatures and superscripts) with their equivalents.

Normalization Form KD (NFKD) is like NFD, but it also applies compatibility decomposition.
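Compatibility normalization is easiest to see on characters such as the ½ fraction, the ﬁ ligature, and a superscript digit. This sketch compares NFC and NFKC on those examples. Note that NFKC can change meaning (4² becomes 42), which is why the K forms are usually reserved for tasks like search and indexing rather than for storing text.

```python
from unicodedata import normalize

samples = ['\N{VULGAR FRACTION ONE HALF}', '\N{LATIN SMALL LIGATURE FI}', '4\N{SUPERSCRIPT TWO}']
for s in samples:
    # NFC leaves compatibility characters alone; NFKC replaces them
    print(repr(normalize('NFC', s)), repr(normalize('NFKC', s)))

# '½' '1⁄2'
# 'ﬁ' 'fi'
# '4²' '42'
```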

Unicode Normalization Forms are formally defined normalizations of Unicode strings which make it possible to determine whether any two Unicode strings are equivalent to each other.

```python
from unicodedata import normalize

s1 = 'café'
s2 = 'cafe\N{COMBINING ACUTE ACCENT}'
print(len(normalize('NFC', s1)), len(normalize('NFC', s2)))
# 4 4
print(len(normalize('NFD', s1)), len(normalize('NFD', s2)))
# 5 5
print(normalize('NFC', s1) == normalize('NFC', s2))
# True
print(normalize('NFD', s1) == normalize('NFD', s2))
# True
```
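In practice it helps to wrap the normalization in small comparison functions so it is never forgotten. The names nfc_equal and fold_equal below are hypothetical helpers; fold_equal additionally applies str.casefold() for a case-insensitive comparison.

```python
from unicodedata import normalize

def nfc_equal(str1: str, str2: str) -> bool:
    """Hypothetical helper: compare two strings after NFC normalization."""
    return normalize('NFC', str1) == normalize('NFC', str2)

def fold_equal(str1: str, str2: str) -> bool:
    """Hypothetical helper: like nfc_equal, but also case-insensitive via casefold()."""
    return normalize('NFC', str1).casefold() == normalize('NFC', str2).casefold()

s1 = 'café'
s2 = 'cafe\N{COMBINING ACUTE ACCENT}'
print(s1 == s2)                          # False
print(nfc_equal(s1, s2))                 # True
print(nfc_equal('A', 'a'))               # False
print(fold_equal('Straße', 'strasse'))   # True
```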

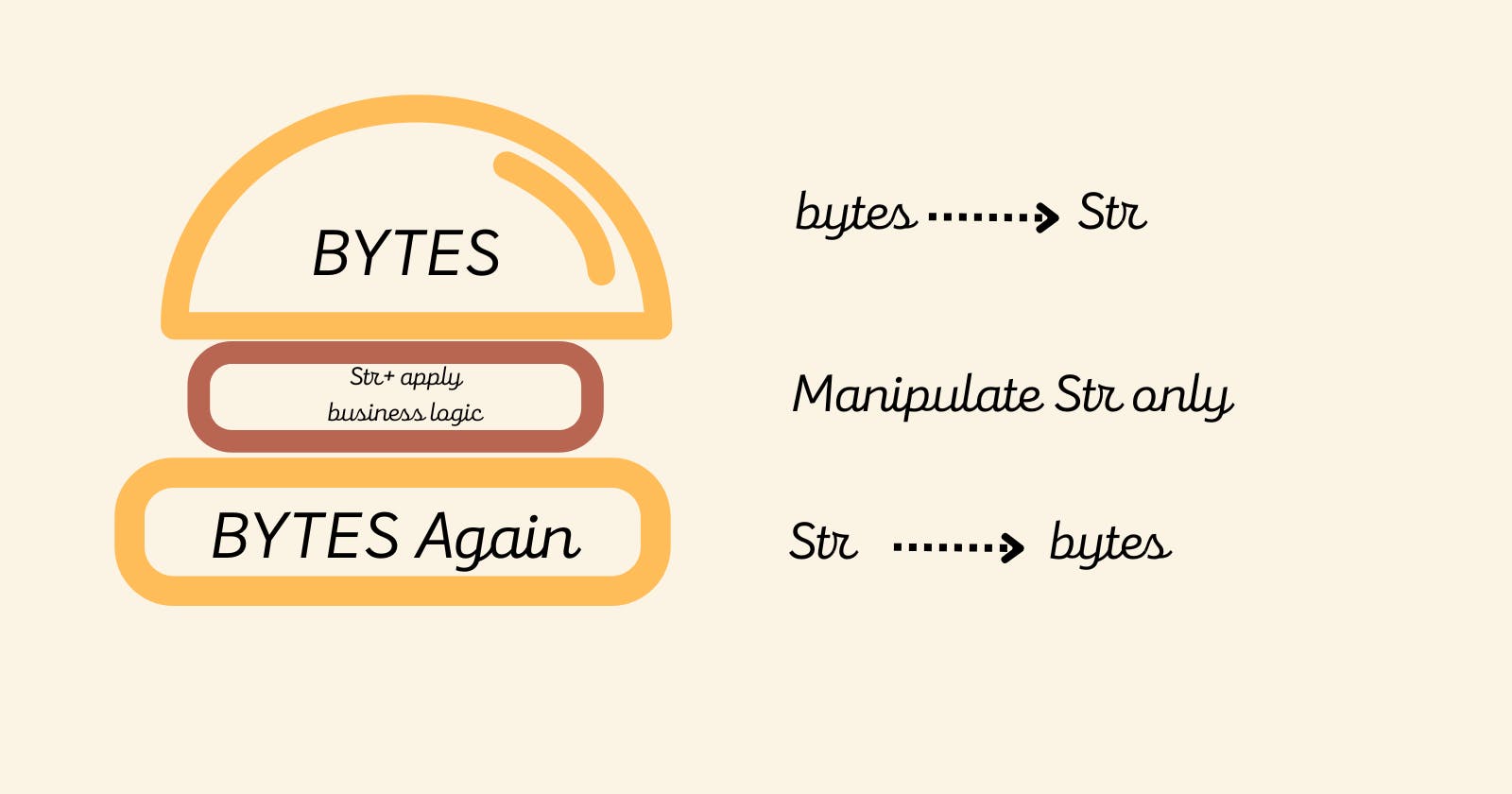

So what is the Unicode Sandwich?

The Unicode sandwich is the best practice for handling text in Python. It consists of three steps:

Decode bytes to str as soon as possible (on input).

Apply your business logic to str only.

Encode back to bytes as late as possible (on output).
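Here is a minimal sketch of the sandwich applied to a file, using only the standard library. The file name and the business_logic function (NFC normalization plus uppercasing) are hypothetical placeholders; the point is that bytes are decoded once on the way in, only str is handled in the middle, and text is encoded once on the way out. Passing an explicit encoding to open() lets text mode do the decoding and encoding instead of relying on the platform default.

```python
import os
import tempfile
from unicodedata import normalize

def business_logic(text: str) -> str:
    """Hypothetical processing step: it only ever sees str, never bytes."""
    return normalize('NFC', text).upper()

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, 'cities.txt')  # hypothetical input/output file

    # Setup only: create an input file that contains UTF-8 bytes
    with open(path, 'wb') as f:
        f.write('São Paulo\nMontréal\n'.encode('utf_8'))

    # 1. Decode to str as early as possible (text mode decodes while reading)
    with open(path, encoding='utf_8') as f:
        text = f.read()

    # 2. Apply business logic to str only
    result = business_logic(text)

    # 3. Encode back to bytes as late as possible (text mode encodes while writing)
    with open(path, 'w', encoding='utf_8') as f:
        f.write(result)

print(result)
# SÃO PAULO
# MONTRÉAL
```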

Conclusion

We got a bit technical in this article, but it is only the tip of the iceberg: a huge number of subtle bugs can creep into an application because of Unicode issues, starting with something as simple as checking whether two strings are equal.

Further Reading

Now, this was a brief overview of Unicode text and bytes in Python. It’s a heavy subject that we cannot cover fully in just one article, so here’s a list of links that can help your research as they did mine:

The full article is on my Blog.

The official “Unicode HOWTO” in the Python docs.

Chapter 2, “Strings and Text”, of the book Python Cookbook, 3rd ed.

Nick Coghlan’s “Python Notes” blog has two posts very relevant to this chapter: “Python 3 and ASCII Compatible Binary Protocols” and “Processing Text Files in Python 3”. Highly recommended.

List of encodings supported in Python.

The book Unicode Explained by Jukka K. Korpela.

The book Programming with Unicode.

Chapter 4 of the book Fluent Python.